ETL extracts and gathers data from heterogeneous sources, transforms it in a staging area, and loads it into its target. This article covers the types of ETL pipelines, their benefits, the difference from ELT, and some use cases.

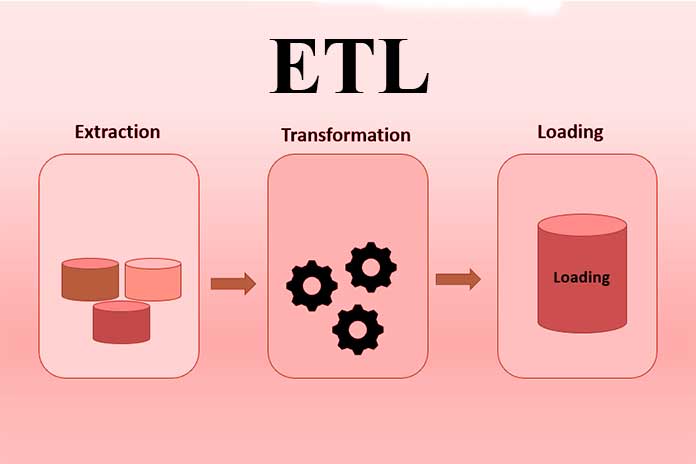

The acronym ETL stands for Extract, Transform and Load: it refers to a pipeline, that is, a set of software components that perform a defined sequence of operations on data. The ETL pipeline collects data from various sources, transforms it according to business rules, and loads it into a target data store.

What Does ETL Stand For

“Extract” refers to the process of extracting data from a source, which can be: a SQL (Structured Query Language) database, that is, a relational database, with or without OLTP (Online Transaction Processing); a NoSQL database; a plain text or XML file; ERP (Enterprise Resource Planning) or CRM (Customer Relationship Management) systems; a cloud platform.

“Transform” refers to the process of changing the format or structure of a dataset into what is required by the target system. The extracted data can be selected, normalized, translated, used as a source for computing derived data, joined, aggregated, or enriched: the goal is to make it homogeneous and therefore usable for data analysis. The traditional ETL process transforms the data in a staging area, hosted on a server separate from both the source and the target.
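
As a rough illustration of the transform step, the sketch below normalizes a field, joins order rows to a customer lookup, and aggregates totals. The field names, the sample rows, and the `transform` function are hypothetical and chosen only for this example.

```python
from collections import defaultdict

# Hypothetical extracted rows; field names are illustrative only.
orders = [
    {"customer_id": 1, "amount": "19.90", "country": " it "},
    {"customer_id": 2, "amount": "5.00", "country": "FR"},
    {"customer_id": 1, "amount": "10.10", "country": "IT"},
]
customers = {1: "Alice", 2: "Bob"}


def transform(rows, customer_names):
    """Normalize, join, and aggregate extracted order rows."""
    totals = defaultdict(float)
    for row in rows:
        country = row["country"].strip().upper()         # normalize
        name = customer_names[row["customer_id"]]        # join on customer_id
        totals[(name, country)] += float(row["amount"])  # aggregate
    return [
        {"customer": name, "country": country, "total": round(total, 2)}
        for (name, country), total in sorted(totals.items())
    ]


result = transform(orders, customers)
```

After this step every row has the same shape, which is what makes the data usable for analysis in the target system.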

“Load” refers to the process of loading data into the target system, either by overwriting, which replaces previous data, or by updating, which does not delete existing data. The three phases of the process are carried out in parallel, reducing processing times: data that has already been extracted can be transformed and loaded while the first phase is still in progress.
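
A minimal sketch of the three phases chained together, assuming an in-memory list as the source and a dictionary as the target. Python generators interleave the phases record by record rather than running them truly in parallel, but they show the same idea: a record can be transformed and loaded before extraction has finished. The `mode` parameter and all names are assumptions made for this example.

```python
def extract(source):
    """Yield records one at a time instead of materializing them all."""
    for record in source:
        yield record


def transform(records):
    """Transform each record as soon as it has been extracted."""
    for record in records:
        yield {"id": record["id"], "name": record["name"].title()}


def load(records, target, mode="update"):
    """Load records into a dict keyed by id.

    mode="overwrite" replaces previous data; mode="update" keeps
    existing rows and only inserts or updates the incoming ones.
    """
    if mode == "overwrite":
        target.clear()
    for record in records:
        target[record["id"]] = record
    return target


source = [{"id": 2, "name": "bob"}, {"id": 3, "name": "carol"}]
target = {1: {"id": 1, "name": "Alice"}}

load(transform(extract(source)), target, mode="update")
```

With `mode="update"` the existing row with id 1 survives the load; calling `load` again with `mode="overwrite"` would leave only the incoming rows.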

Different levels of ETL correspond to different levels of repositories: the first level holds the data at the greatest level of detail, from which other ETLs, second level and beyond, can start to build datasets for specific analyses, including OLAP (Online Analytical Processing), that is, multidimensional online analysis.

What Are The Characteristics Of An ETL

Connectivity

An effective ETL should connect to all the data sources already used in the company. It should therefore come with built-in connectors for databases, various sales and marketing applications, and different file formats, to facilitate interoperability between systems and the movement and transformation of data between source and target as needed.

Easy To Use Interface

A bug-free and easy-to-configure interface is needed to optimize the time and cost of use and to improve the visualization of the pipeline.

Error Handling

The ETL handles errors efficiently, ensuring data consistency and accuracy. In addition, it offers smooth and efficient data transformation capabilities, preventing data loss.
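
One common way to handle errors without losing data is to route failing rows to a reject list instead of aborting the run. The sketch below assumes rows arrive as dictionaries of strings; the function name and fields are hypothetical.

```python
def transform_with_rejects(rows):
    """Convert rows, collecting failures instead of aborting the run."""
    clean, rejects = [], []
    for row in rows:
        try:
            clean.append({"id": int(row["id"]), "price": float(row["price"])})
        except (KeyError, TypeError, ValueError) as exc:
            # Keep the bad row and the reason so nothing is silently lost.
            rejects.append({"row": row, "error": repr(exc)})
    return clean, rejects


rows = [
    {"id": "1", "price": "9.99"},
    {"id": "2", "price": "n/a"},  # price is not a number
    {"id": "3"},                  # price field is missing
]
clean, rejects = transform_with_rejects(rows)
```

The valid row reaches `clean`, while the two problematic rows end up in `rejects` together with the error that caused them, so they can be inspected and reprocessed later.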

Access To Data In Real-Time

The ETL retrieves data in real-time to ensure timely updates concerning ongoing processes.

Built-In Monitoring

The ETL has an integrated monitoring system that ensures smooth process execution.

ETL Tool: The Differences Between Pipelines

ETL pipelines are categorized by use case: four of the most common types are batch processing, real-time processing, on-premise processing, and cloud processing, depending on how and where you need to transform the data. The batch processing pipeline is mainly used for traditional analytics use cases.

Data is periodically collected, transformed, and moved to a cloud data warehouse for traditional business intelligence use cases. Users can quickly move high-volume data into a cloud data lake or data warehouse and schedule jobs for processing with minimal human intervention. With batch processing, users collect and store data during a batch window, which helps manage large volumes of data and repetitive tasks efficiently.
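
The batch idea can be sketched as splitting the collected records into fixed-size chunks and processing each chunk in one pass. The batch size, the placeholder transform, and the function names below are assumptions for illustration; a real job would be triggered by a scheduler and would write to the warehouse.

```python
from itertools import islice


def batches(records, size):
    """Yield successive lists of at most `size` records."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def run_batch_job(records, size):
    """Process every batch and return the row count of each load."""
    loaded_counts = []
    for chunk in batches(records, size):
        transformed = [value * 2 for value in chunk]  # placeholder transform
        loaded_counts.append(len(transformed))        # placeholder load
    return loaded_counts


counts = run_batch_job(range(10), size=4)
```

Ten records with a batch size of four produce three loads, the last one only partially filled.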

The real-time processing pipeline lets users ingest structured and unstructured data from a wide range of streaming sources, such as IoT, connected devices, social media feeds, sensor data, and mobile applications, using a high-speed messaging system, which ensures that the data is captured accurately. Data transformation happens in real time, using a real-time processing engine to drive analytics use cases such as fraud detection, predictive maintenance, targeted marketing campaigns, and proactive customer service.

The on-premise processing pipeline can be deployed on site, increasing data security. Many companies operate legacy systems that have both data and repositories configured on-premise.

The cloud processing pipeline works with multiple cloud-based applications and takes advantage of their flexibility and agility in the ETL process.

What Are The Advantages Of The ETL Process?

Defined And Continuous Workflow And Data Quality

The ETL first extracts data from homogeneous or heterogeneous sources, deposits it in a staging area, cleans and transforms it, and finally stores it in a repository, usually a data warehouse. The result is a well-defined and continuous workflow that ensures high-quality data, with advanced profiling and cleaning processes.

Ease Of Use

After you choose the data sources, the ETL tools automatically identify their types and formats, set the extraction and processing rules, and finally load the data into the target repository. This automation makes coding in the traditional sense, where you have to write every procedure by hand, unnecessary.

Operational Resilience

Many data warehouses are fragile in operation: ETL tools have built-in error handling functionality that helps data engineers develop a resilient and well-instrumented ETL process.

Support In Complex Data Analysis And Management

ETL tools help move large volumes of data and transfer them in batches. When rules and transformations are complicated, they help with data analysis, string manipulation, and the modification and integration of multiple datasets.

Quick View Of Processes

ETL tools are based on a graphical user interface (GUI) and offer a visual system logic flow. The GUI allows you to use the drag and drop function to view the data process.

Business Intelligence Improvement

Access to data is easier with ETL tools: better access to information directly affects data-driven strategic and operational decisions. A dataset processed with ETL is structured and transformed: analysis on a single predefined use case becomes stable and fast.

Compliance With GDPR And HIPAA Standards

In an ETL process, it is possible to omit any sensitive data before uploading it to the target system: this promotes compliance with the GDPR and the HIPAA – Health Insurance Portability and Accountability Act.
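
A minimal sketch of omitting sensitive fields before the load step. The field names in `SENSITIVE_FIELDS` are hypothetical; which fields count as sensitive depends on the data and on the applicable regulation.

```python
SENSITIVE_FIELDS = {"ssn", "diagnosis"}  # hypothetical field names


def drop_sensitive(record, sensitive=SENSITIVE_FIELDS):
    """Return a copy of the record without the sensitive fields."""
    return {key: value for key, value in record.items() if key not in sensitive}


patient = {"id": 7, "city": "Milan", "ssn": "000-00-0000", "diagnosis": "flu"}
safe = drop_sensitive(patient)
```

Only `safe` would be passed on to the target system; the original record is left unchanged in the staging area.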

Increased Speed Of Time-To-Value

ETL enables context and data aggregations to generate more revenue and save time, effort, and resources. The ETL process helps increase ROI and monetize data by improving business intelligence.

What Is The Difference Between ETL And ELT?

ELT stands for Extract, Load, and Transform: the extracted data is loaded immediately into the target system and only then transformed for analysis. In the ETL process, transforming large volumes of data can take a long time up front, because it has to happen before loading.

In ELT, by contrast, the transformation is carried out afterwards, so it takes little time at the start; however, it can slow down query and analysis processes if there is not enough processing power.

Loading in ELT is faster, since it does not need a staging area as ETL does. Both ETL and ELT support data warehouses. However, while the ETL process has been around for decades, ELT is newer and the ecosystem of tools to implement it is still growing.
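
The ELT order of operations can be sketched with Python's built-in `sqlite3` module, using an in-memory database as a stand-in for the target warehouse. The table and column names are hypothetical. Raw data is loaded untouched, and the transformation runs afterwards inside the target using its own SQL engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stands in for the target warehouse

# Load: raw data goes into the target untouched.
conn.execute("CREATE TABLE raw_sales (region TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?)",
    [(" north ", "10.5"), ("NORTH", "4.5"), ("south", "3")],
)

# Transform: done afterwards, inside the target, by its own SQL engine.
conn.execute(
    """
    CREATE TABLE sales_by_region AS
    SELECT UPPER(TRIM(region)) AS region,
           SUM(CAST(amount AS REAL)) AS total
    FROM raw_sales
    GROUP BY UPPER(TRIM(region))
    """
)

summary = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
```

No staging area is involved: the cost of the transformation is paid by the target system, which is why ELT depends on the target having enough processing power.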

ETL processes that involve an on-premise server require frequent maintenance, given their fixed tables, fixed deadlines, and the need to repeatedly select the data to load and transform. Cloud-based, automated ETL solutions require little maintenance. Classic on-premises ETL needs dedicated hardware that ELT does not require, so ETL is more expensive.

However, ETL is also more easily compliant with GDPR and HIPAA (Health Insurance Portability and Accountability Act) standards, because any sensitive data can be omitted before loading. In the ELT process, by contrast, all data is loaded into the target system. ELT allows for pushdown optimization: literally “push down”, a performance optimization technique in which data processing is performed on the database itself, on the source side, on the target side, or fully.

Source-side pushdown optimization analyzes the mapping from source to target and generates a SQL statement according to this mapping: the statement is executed on the source database and retrieves the records, which are then processed further to insert into and modify the target database.

Target-side pushdown optimization generates the SQL statement based on an analysis of the mapping from target to source. Full pushdown optimization occurs when the source and target databases are on the same management system: depending on the query to be executed, the optimization will be performed on the source or target side.
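
A rough sketch of the full pushdown case, assuming source and target tables live in the same SQLite database. The `build_pushdown_sql` helper, the mapping format, and the table names are hypothetical and are not the API of any specific ETL tool. The point is only that the mapping is turned into a single SQL statement and executed by the database itself.

```python
import sqlite3


def build_pushdown_sql(source, target, mapping):
    """Generate one INSERT ... SELECT from a mapping of
    target column -> SQL expression over source columns.
    Identifiers are interpolated directly, so use trusted names only."""
    columns = ", ".join(mapping)
    expressions = ", ".join(mapping.values())
    return f"INSERT INTO {target} ({columns}) SELECT {expressions} FROM {source}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_orders (id INTEGER, qty INTEGER, unit_price REAL)")
conn.execute("CREATE TABLE tgt_orders (order_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO src_orders VALUES (?, ?, ?)", [(1, 2, 5.0), (2, 3, 1.5)]
)

# Hypothetical mapping analyzed by the tool.
mapping = {"order_id": "id", "total": "qty * unit_price"}
sql = build_pushdown_sql("src_orders", "tgt_orders", mapping)
conn.execute(sql)  # the rows never leave the database

rows = conn.execute(
    "SELECT order_id, total FROM tgt_orders ORDER BY order_id"
).fetchall()
```

Because the computation of `total` happens inside the database, no records are pulled into the Python process for transformation.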